The out-of-cycle, surprising German federal election of 2025 has ended. I call this the untethered election because my model, which mixes a prediction based on fundamentals with a polling average, saw big differences between the two. These differences were much bigger than in past election cycles (I wrote a whole post about why I think that might be the case).
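For readers who want a concrete picture of what "mixing" fundamentals with a polling average can mean, here is a minimal sketch. It is not Vorcast's actual code: the function names, the fixed `poll_weight`, and the two-party example numbers are all assumptions made purely for illustration.

```python
def blend(fundamentals: dict[str, float],
          polls: dict[str, float],
          poll_weight: float) -> dict[str, float]:
    """Weighted average of a fundamentals forecast and a polling average.

    poll_weight is a hypothetical knob between 0 (fundamentals only)
    and 1 (polls only).
    """
    return {
        party: poll_weight * polls[party] + (1 - poll_weight) * fundamentals[party]
        for party in fundamentals
    }


def mean_gap(fundamentals: dict[str, float], polls: dict[str, float]) -> float:
    """Average absolute gap, in percentage points, between the two inputs."""
    gaps = [abs(polls[party] - fundamentals[party]) for party in fundamentals]
    return sum(gaps) / len(gaps)


# Made-up numbers for two imaginary parties, not real forecasts.
example_fundamentals = {"Party A": 30.0, "Party B": 20.0}
example_polls = {"Party A": 34.0, "Party B": 16.0}

blended = blend(example_fundamentals, example_polls, poll_weight=0.5)
gap = mean_gap(example_fundamentals, example_polls)
print(blended, gap)
```

The larger `mean_gap` is, the more the blended forecast depends on how much weight the polls get, which is one way to think about an "untethered" election.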

Just like I said in 2021, I owe it to the few, incredibly smart people who read this blog to do a proper evaluation of how well my model did. So here we go.

The overall verdict on my model: it got the big picture right, with some notable misses.

Let’s get into what I got right and where my model needs some work.

Biggest win: I got the final election result right

I predicted back in December that we would end up with a grand coalition. Though the negotiations between the CDU/CSU and the SPD have yet to begin, two facts make this a win for my model (and my analysis): all notable representatives of both parties are already pushing for this governing coalition, and it is numerically possible.

Back in December, I had the CDU/CSU at 31.6% (I was off by 3.1 points but closer than the polls at that point) and the SPD at 16.9% (only 0.5 points off from the final result, much closer than the polls). My model expected the SPD to beat their polls. Though their final result was still their worst-ever electoral performance, they did overperform their polls slightly, which my model suggested would be the case throughout the entire election cycle.

My model’s predictions gave me the confidence to declare back then that “A grand coalition between the CDU/CSU and SPD is the most likely outcome of this election and also more likely than the polls currently suggest”. I was dead on with that prediction.

Below you can find my predictions from December 5th, 2024.

| Party | 2-week polling average (%) | Vorcast model prediction (%) |
| --- | --- | --- |
| CDU/CSU | 32.3 | 31.6 |
| SPD | 15.5 | 16.9 |
| AFD | 18.2 | 15.8 |
| Die Grünen | 12.2 | 9.6 |
| FDP | 4.1 | 5.9 |
| BSW | 6.2 | 5.5 |
| Die Linke | 3.1 | 4.2 |
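The "closer than the polls" claim for the two coalition parties can be checked with a few lines of arithmetic. The sketch below uses the December numbers from the table; the final results (28.5% and 16.4%) are the values implied by the errors of 3.1 and 0.5 points stated above, and the function name is just an illustrative choice.

```python
# (polling average, model prediction) from December 5th, 2024, in percent.
december = {
    "CDU/CSU": (32.3, 31.6),
    "SPD": (15.5, 16.9),
}

# Final results implied by the errors quoted in the text
# (31.6 - 3.1 and 16.9 - 0.5), rounded to one decimal.
final_result = {"CDU/CSU": 28.5, "SPD": 16.4}


def absolute_errors(forecasts, results):
    """Return {party: (poll_error, model_error)} in percentage points."""
    errors = {}
    for party, (poll_avg, model) in forecasts.items():
        actual = results[party]
        errors[party] = (
            round(abs(poll_avg - actual), 1),
            round(abs(model - actual), 1),
        )
    return errors


errors = absolute_errors(december, final_result)
for party, (poll_err, model_err) in errors.items():
    print(f"{party}: polls off by {poll_err}, model off by {model_err}")
```

For both parties the model's December error is smaller than the polling average's error on the same date.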

My model’s predictions made me confident that this would be the final outcome. I ended up being more correct than, for example, the prediction model of Die Zeit. They did a fantastic job with their visualizations and extensive data journalism on the election. My only critique would be that they played it excessively safe by only predicting possible, rather than likely, coalition constellations. This led them to predict, even on February 13th, that a CDU/CSU, SPD, and Green Party coalition was the most likely.

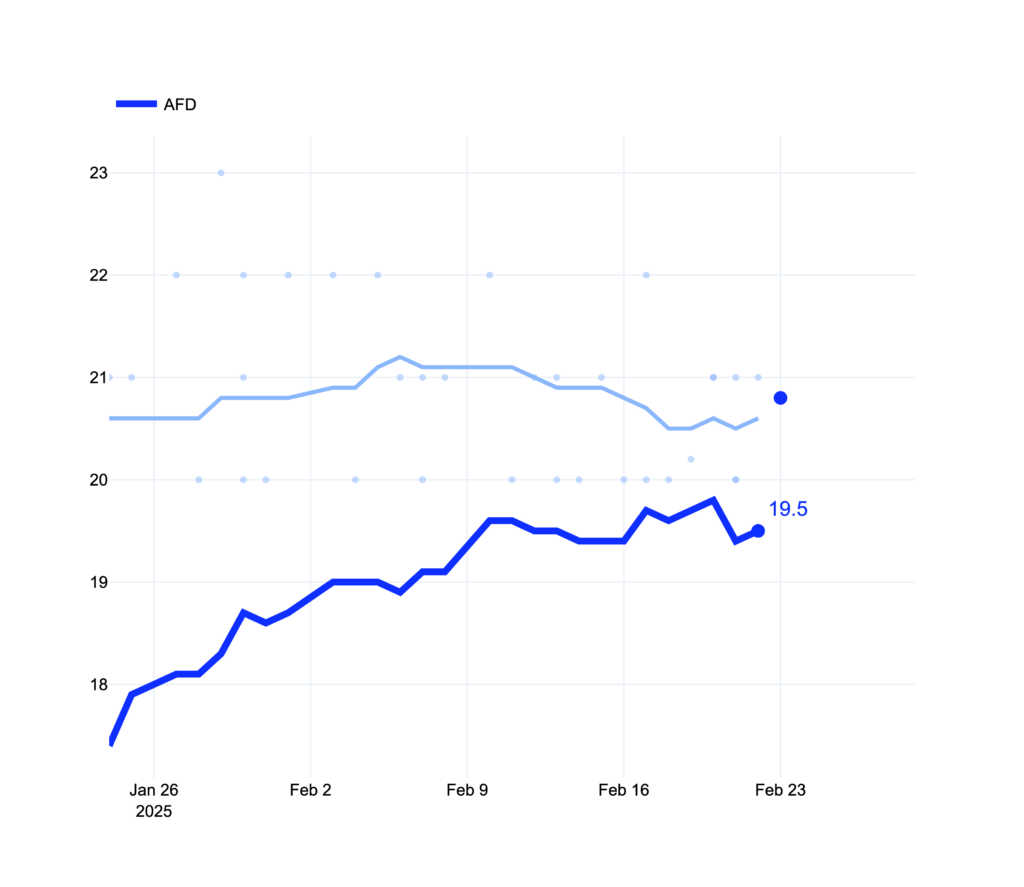

Biggest miss: The AFD finished as strong as the polls predicted

My model was very skeptical of the AFD’s polling numbers all throughout the election cycle, but they finished pretty much exactly where a simple polling average predicted they would. When it comes time for my model’s next iteration, this is definitely one of the top misses I’ll have to address. Simply put, the AFD beat their fundamentals (or they’re not tethered to them).

I saw some people comment that the AFD didn’t outperform their polls, so the support they received from the Trump administration and, most vocally, Elon Musk didn’t translate into extra votes. My model actually suggests that they might have finished a good bit weaker, so perhaps their American supporters actually helped them keep their numbers up. It’s impossible to prove either theory. Anyway, my model missed the mark on this one.

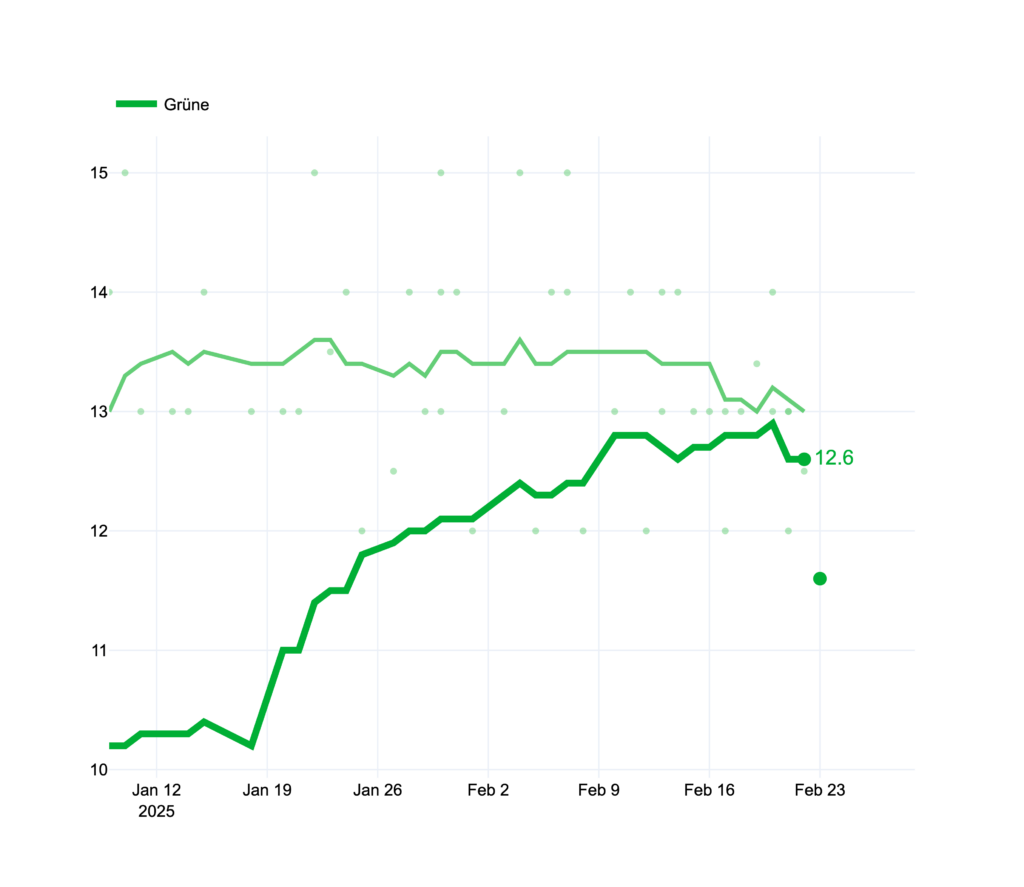

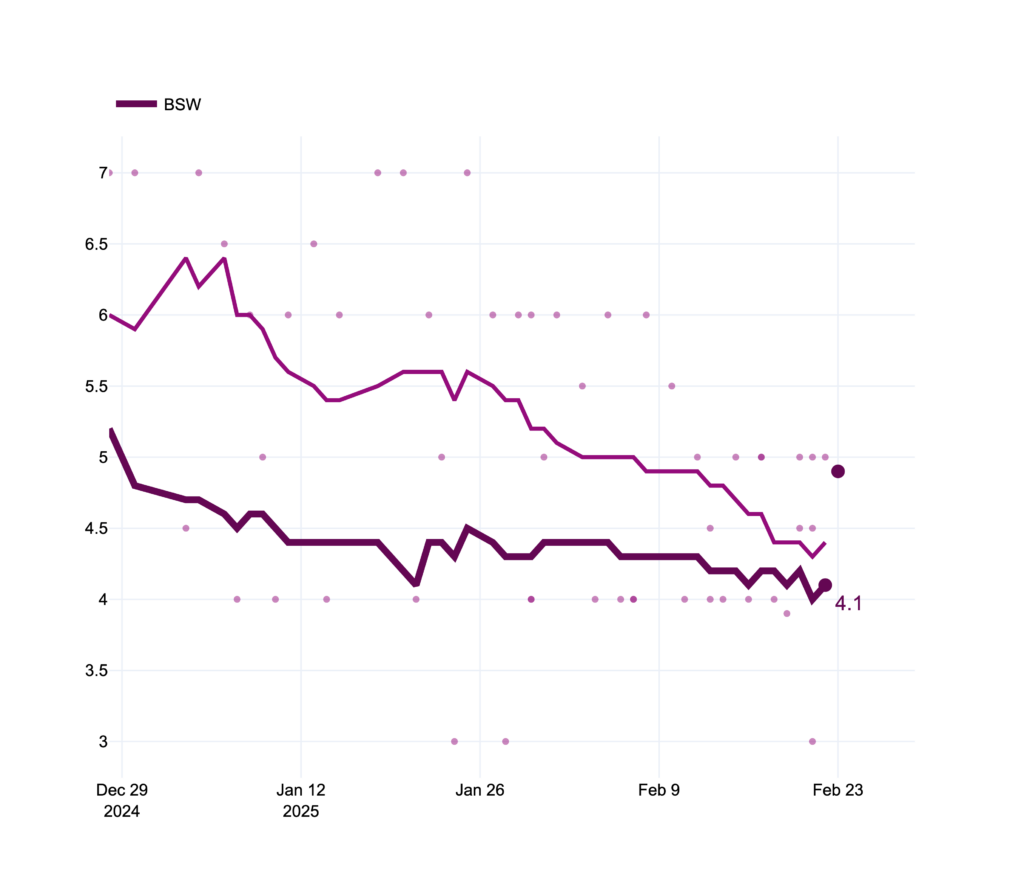

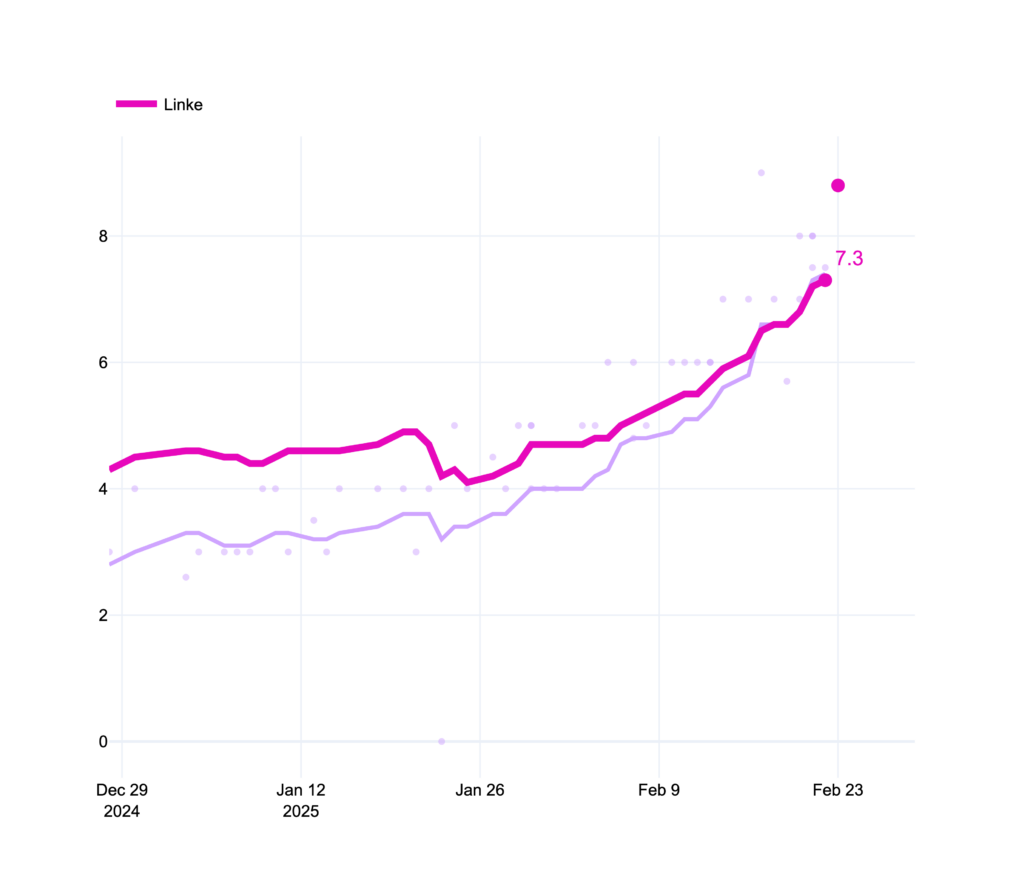

Other wins: Die Grünen, die Linke and the BSW

Spare a thought for the Green party. They underperformed the polls badly once again. This is a recurring trend in the last few German elections. My model predicted this correctly all throughout the final 1.5 months of the election.

Similarly, my model was consistently skeptical of the BSW’s short-lived popularity. They dipped below the all-important 5% threshold in my model in January and remained there until the election. The polls took until mid-February to give them similar numbers. They ended up just below the 5% threshold.

A smaller win for my model was that it remained a bit more bullish than the polls on Die Linke, which also paid off. Though my model didn’t anticipate their late surge (no one did), it did anticipate their reentry a week before the polls.

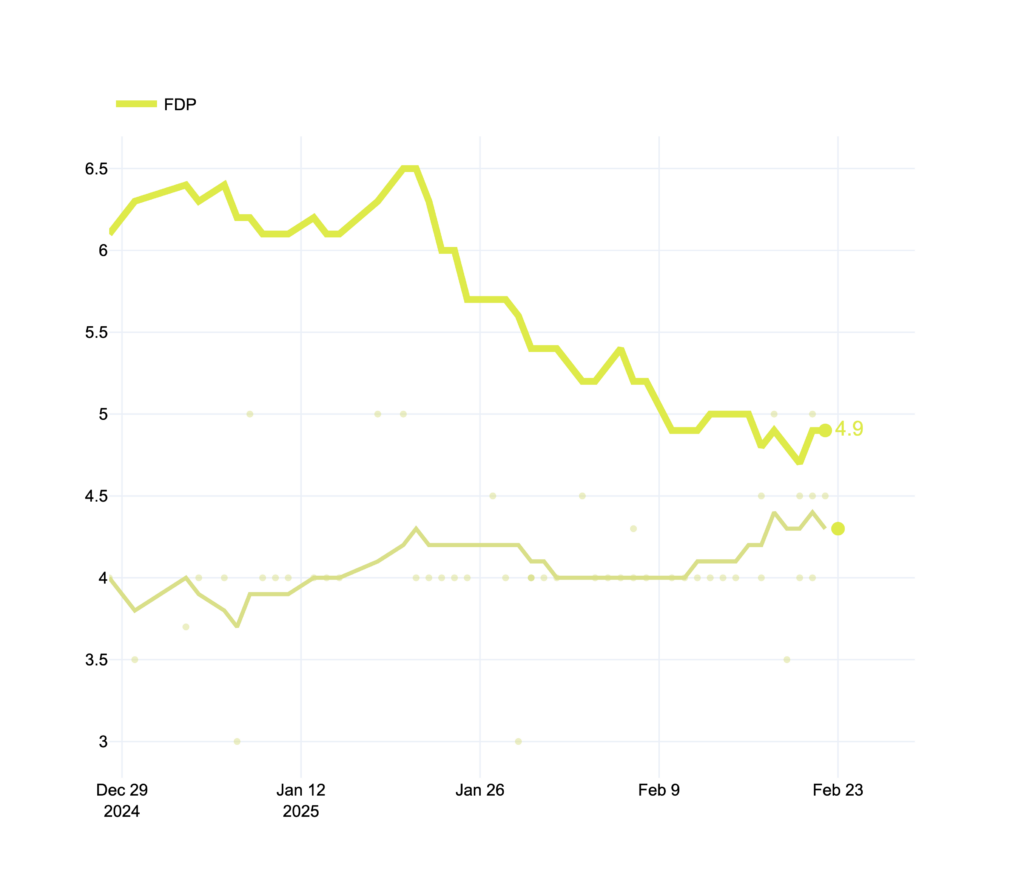

The FDP underperformed their fundamentals but not their polls

As prescient as my model was with regard to some of the outcomes discussed above, its bullishness on the FDP did not pay off. Though the FDP rarely managed to hit 5% in the polls, my model was convinced that they could pull off a late victory. It took my model until the final 2 weeks before the election to predict their eventual exit from the Bundestag.

Was Vorcast useful?

Since the last US Presidential election, I’ve been thinking a lot about the utility of election models. All major prediction publications (Nate Silver’s, The Economist’s and 538’s) basically had the election as a 50:50 coin toss. Now all of these modelers will explain to you (in way more words) that though the outcome was uncertain, it didn’t mean that the eventual result would be close and that theirs are probabilistic forecasts. I’m one of those people who read the methodology pages thoroughly and geek out about how these models work. I love that they exist. But I also have to admit that none of these models were very helpful for the 2024 presidential election.

I think the simple fact is that most people who consume election models want to know one thing: How is the election gonna go? Who’s gonna win? What will the future look like?

I believe my model answered that question correctly, even as far back as in early December. But that doesn’t mean that my model was successful across the board, far from it.

The German federal election of 2025 took me and most of Germany by surprise. It happened 7 months earlier than it should have because the governing coalition fell apart in early November. I made the conscious decision to try to release my model early rather than rework it too much. I believe it ultimately helped me assess the German electorate better than I could have without it, and I hope the same is true for you.

Nonetheless, there were some big misses that I’ll have to address in a future version. Some obvious improvements come to mind:

- My polling average was calibrated too heavily on the 2021 election, specifically putting too much weight on the Allensbach Institute, which was the worst-performing polling institute in 2025

- Candidate popularity is a feature I’ve long wanted to incorporate

- I want to include additional economic indicators in my fundamentals forecast
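As an illustration of the first point, one way to avoid leaning too hard on a single election is to average each institute's error over several elections before turning it into a weight. This is only a sketch of that idea, not how Vorcast currently weights polls; the institute names and error values below are invented placeholders.

```python
from statistics import mean


def pollster_weights(errors_by_election: dict[str, dict[str, float]]) -> dict[str, float]:
    """Inverse-error weights, using each pollster's error averaged over elections.

    errors_by_election maps an election label to {pollster: mean absolute
    error in percentage points}. All errors must be positive.
    """
    pollsters = next(iter(errors_by_election.values())).keys()
    avg_error = {
        p: mean(errors[p] for errors in errors_by_election.values())
        for p in pollsters
    }
    inverse = {p: 1.0 / err for p, err in avg_error.items()}
    total = sum(inverse.values())
    # Normalise so the weights sum to 1.
    return {p: value / total for p, value in inverse.items()}


# Invented numbers: "Institute A" looks best in 2021 but worst in 2025.
hypothetical_errors = {
    "2021": {"Institute A": 1.0, "Institute B": 2.0},
    "2025": {"Institute A": 3.0, "Institute B": 2.0},
}

weights = pollster_weights(hypothetical_errors)
print(weights)
```

In this toy example, weights based on 2021 alone would favor Institute A two to one, while averaging over both elections gives the two institutes equal weight.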

Vorcast is an incredibly fun and rewarding hobby of mine, but it is a hobby nonetheless and not my day job. In fact, it’s not even my only demanding side project next to my full-time job.

Hopefully the next election cycle is announced earlier or happens on time so I can prepare a bit better. Until then, thank you so much for reading. If you have questions or comments, you can comment directly on this post or reach out on Bluesky or X.

See you at the next Bundestagswahl.